Publications

You can also find my articles on my Scholar Profile.

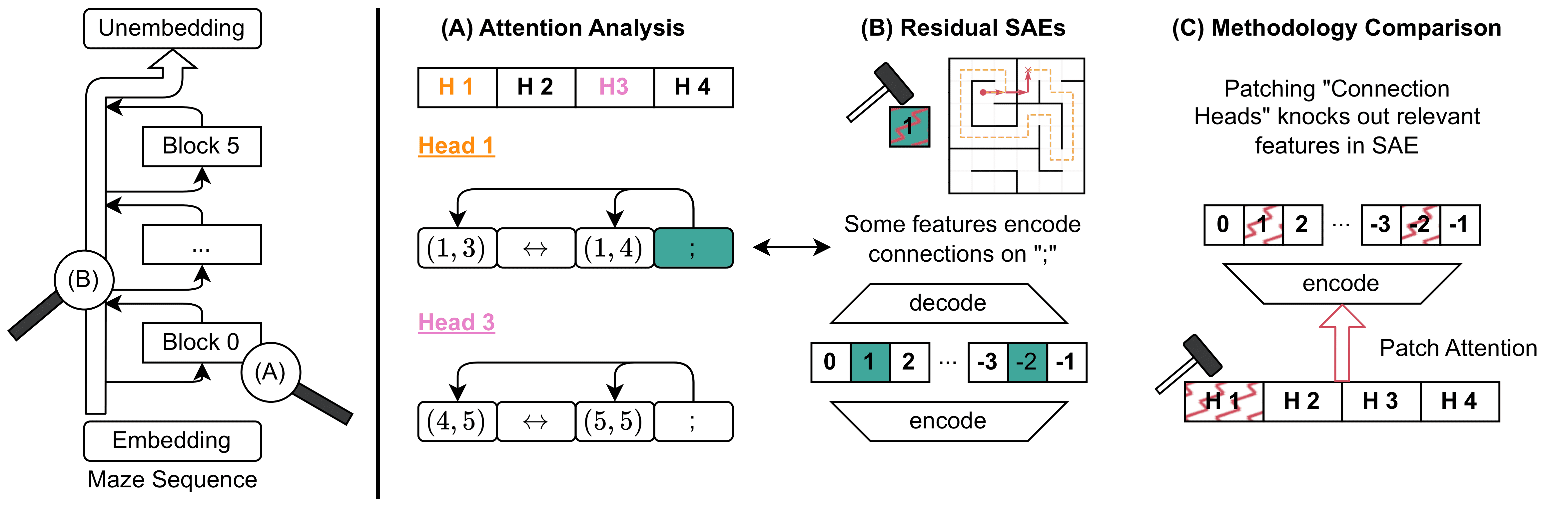

Transformers Use Causal World Models in Maze-Solving Tasks

We identify World Models in transformers trained on maze-solving tasks, using Sparse Autoencoders and attention pattern analysis to examine their construction and demonstrate consistency between feature-based and circuit-based analyses.

Structured World Representations in Maze-Solving Transformers

Transformers trained to solve mazes form linear representations of maze structure and acquire interpretable attention heads that facilitate path-following.

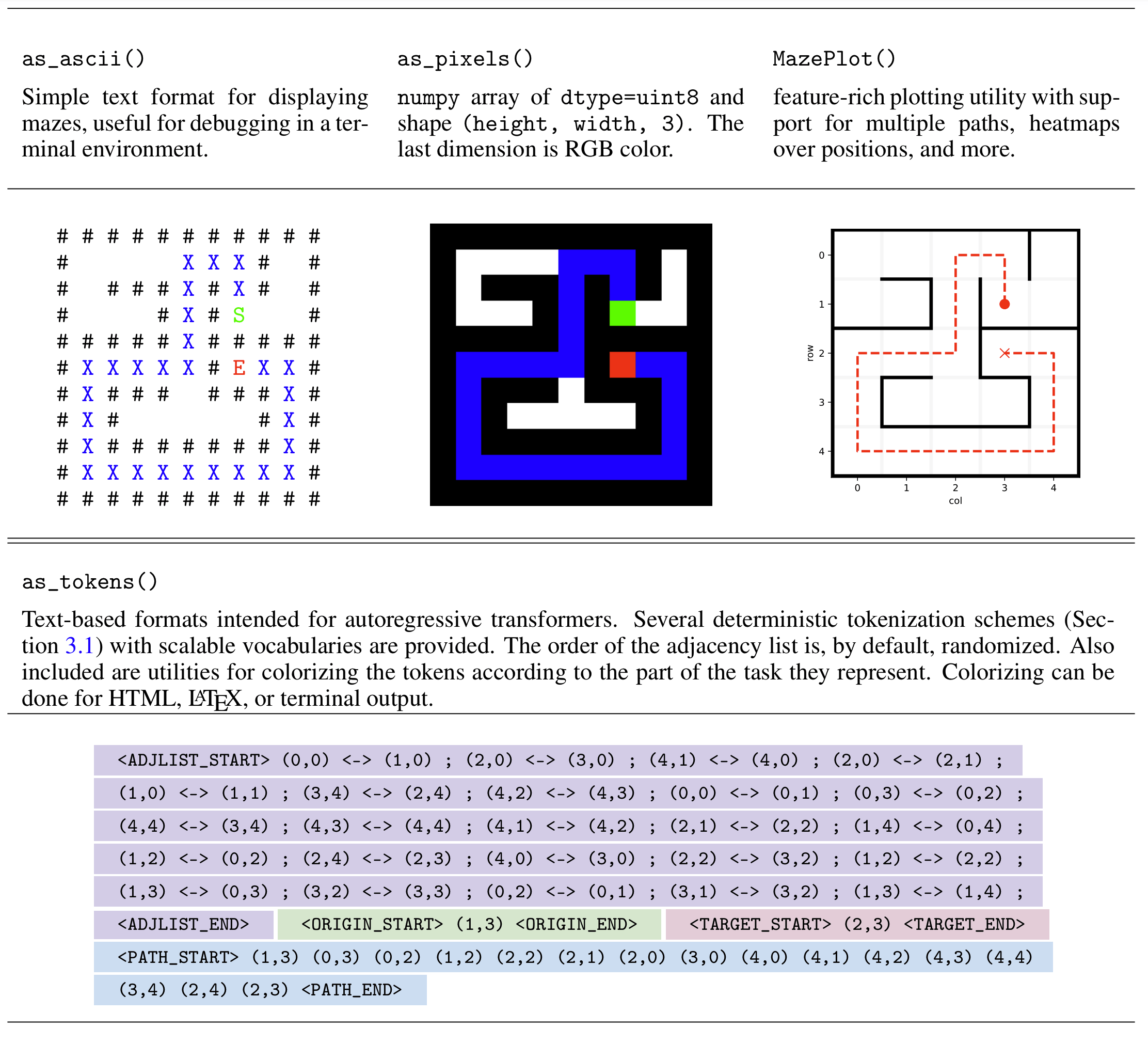

A Configurable Library for Generating and Manipulating Maze Datasets

A library for generating simple maze-like environments for use with transformers.

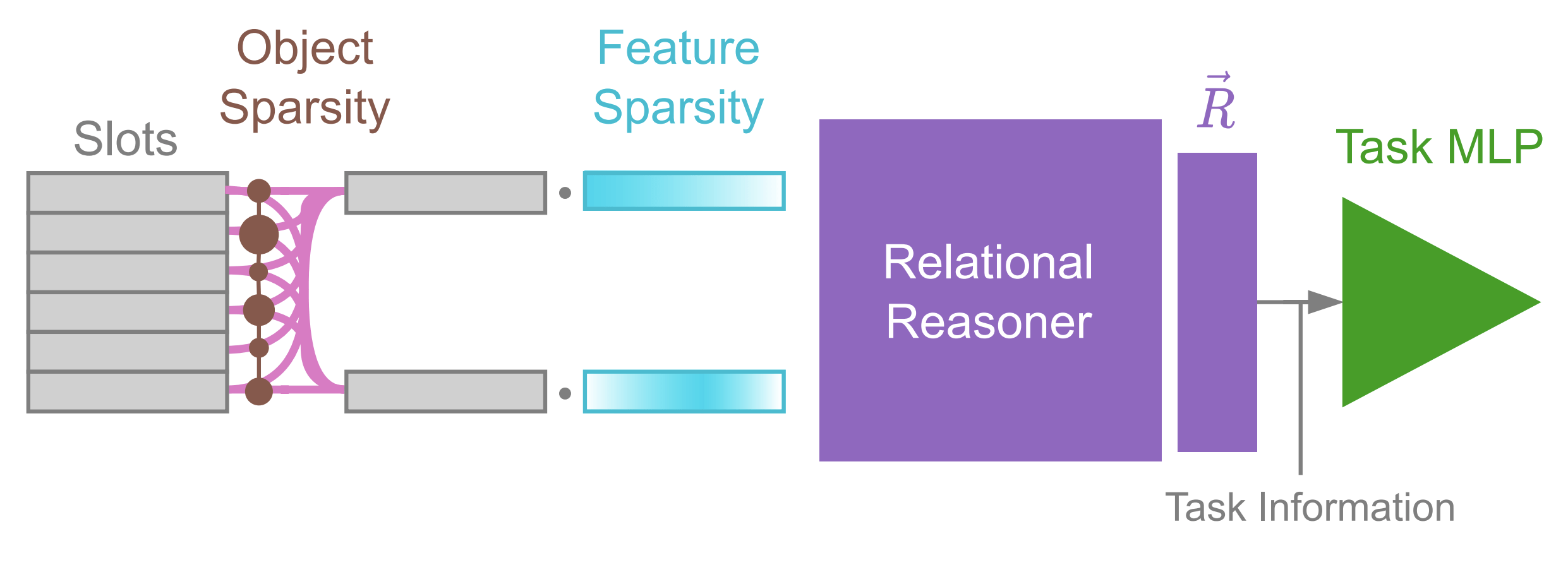

Sparse Relational Reasoning with Object-Centric Representations

Assessing the extent to which sparsity and structured (object-centric) representations are beneficial for neural relational reasoning.

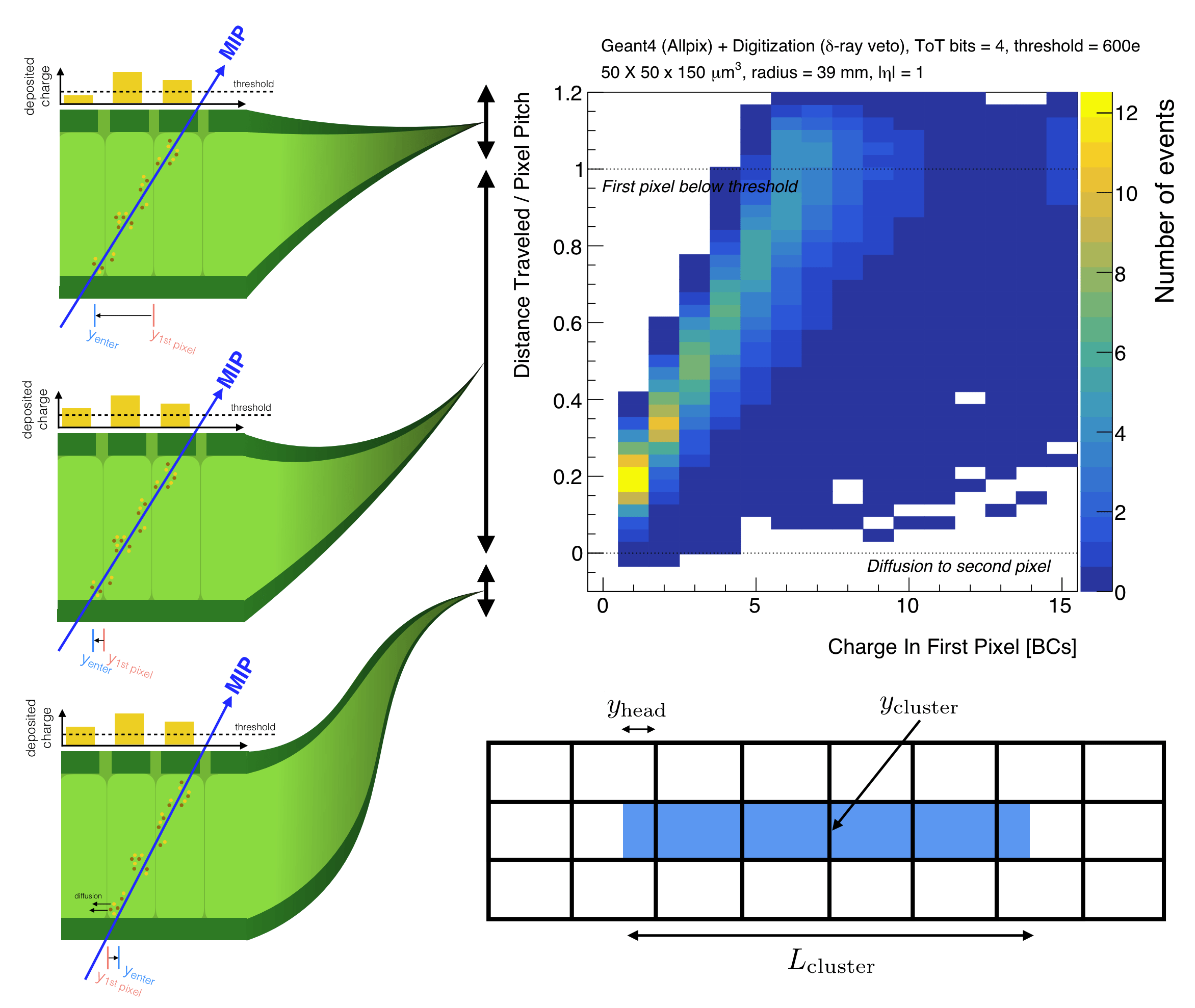

Nonlocal Thresholds for Improving the Spatial Resolution of Pixel Detectors

Investigating the potential use of charge sharing between neighboring pixels in HEP sensors to increase resolution and radiation hardness.